New features enable companies to discover unsafe AI models in the software development pipeline for swift remediation, ensuring the deployment of compliant and secure code

BOSTON, May 7, 2024 /PRNewswire/ -- Legit Security, the leading platform for enabling companies to manage their application security posture across the complete developer environment, today announced new capabilities that allow customers to discover unsafe AI models in use throughout their software factories. These new capabilities provide actionable remediation steps to reduce AI supply chain security risk across the software development lifecycle (SDLC).

Organizations increasingly use third-party AI models to gain a competitive advantage by accelerating development. Still, outsourcing comes with risks, ranging from security vulnerabilities and training data to the way third-party AI models are stored and managed. For example, in late 2023 Legit's research team reported on the potential damage of AI supply chain attacks, such as "AI-Jacking."

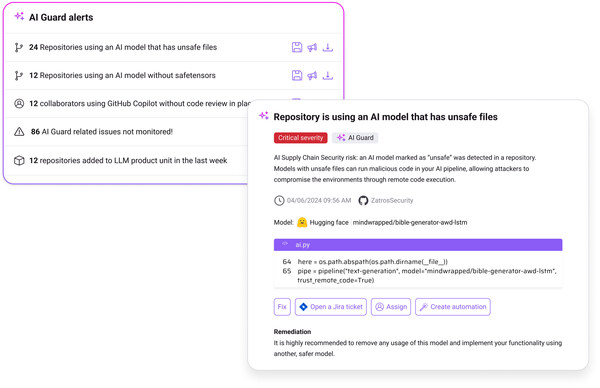

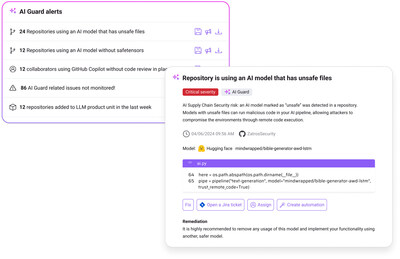

Legit's expanded capabilities go beyond the visibility layer of identifying which applications utilize AI, LLMs, and MLOps by quickly detecting risks in AI models used throughout the SDLC. For security and development teams, this offers an essential tool against AI supply chain security risks by empowering organizations to flag models with unsafe files, insecure model storage, or a low reputation. Initially, Legit covers the market-leading HuggingFace AI models hub.

Legit's latest innovation in enabling the safe use of AI in software development complements other features announced in February 2024, including the ability to discover AI-generated code, enforce policies such as requiring human review of AI-generated code, and enact guardrails that prevent vulnerable code from going to production.

"The release of LLMs and new AI models triggered the generative AI boom and started 'AI-native' application development. We are seeing an explosive growth in the integration and usage of third-party AI models into software development. Nearly every organization uses models within the software they release," said Liav Caspi, Chief Technology Officer at Legit. "This trend comes with risks, and to guard against them companies must have in place a responsible AI framework with continuous monitoring and adherence to best practices to protect their development practices from end to end."

Key benefits of Legit's expanded AI discovery capabilities include:

- Reduction of risk from third-party components in the developer environment

- Alerts and prompts when a developer is using an unsafe model

- Protection of the AI supply chain

Resources

- Read the Legit research team's latest blog, https://www.legitsecurity.com/blog/how-to-reduce-the-risk-of-using-external-ai-models-in-your-sdlc, which delves into the potential pitfalls and considerations businesses must navigate when leveraging external AI models.

- To learn more about Legit's support for reducing the risk of using external third-party AI models in your SDLC, please visit https://www.legitsecurity.com/ai-discovery.

- For further details on the broader Legit platform, visit www.legitsecurity.com.

- Get a demo at Legit's RSA Booth #232 or visit https://www.legitsecurity.com/events for more information about Legit at the 2024 RSA Conference.

About Legit Security

Legit is a new way to manage your application security posture for security, product and compliance teams. With Legit, enterprises get a cleaner, easier way to manage and scale application security, and address risks from code to cloud. Built for the modern SDLC, Legit tackles the toughest problems facing security teams, including GenAI usage, proliferation of secrets and an uncontrolled dev environment. Fast to implement and easy to use, Legit lets security teams protect their software factory from end to end, gives developers guardrails that let them do their best work safely, and delivers metrics that prove the success of the security program. This new approach means teams can control risk across the business – and prove it.

Media Contact:

Michelle Yusupov

Hi-Touch PR

443-857-9468

yusupov@hi-touchpr.com

SOURCE Legit Security